Introduction

Unleash the Power of Gemini with Python

This guide shows how to integrate Google's Gemini language model into your Python projects, from environment setup and prompt design to streaming and error handling.

Gemini's Capabilities

- Text Generation: Create poems, code, scripts, emails, and more.

- Multimodal Processing: Combine text with images for richer understanding.

- Conversational AI: Design engaging chatbots and dialogue systems.

- Question Answering: Extract insights from vast amounts of text data.

- And more: tasks such as summarization, translation, and classification follow the same pattern.

Best Practices for Integration

Environment Setup and API Access

- Install Python 3.9 or later.

- Install the `google-generativeai` package: `pip install google-generativeai`.

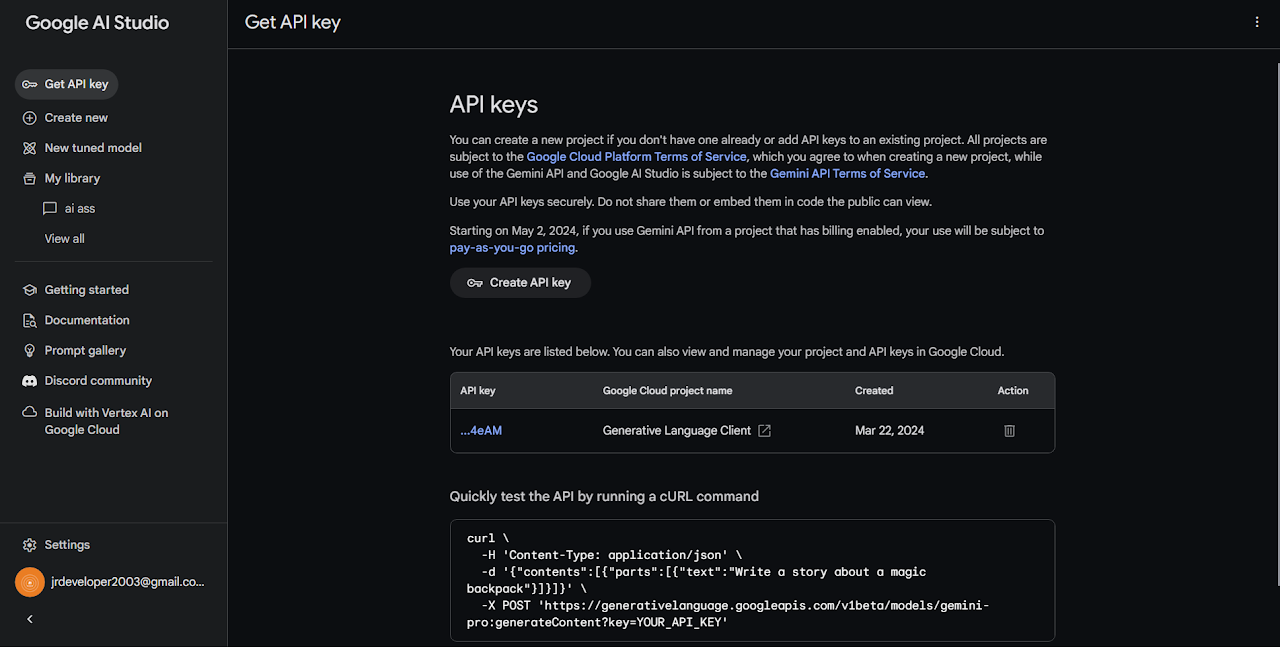

- Obtain an API key from Google AI Studio (https://aistudio.google.com/app/apikey) and store it securely, preferably in an environment variable rather than in source code.
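The setup steps above can be sketched as follows. This is a minimal example under a few assumptions: the variable name `GOOGLE_API_KEY`, the helper names `load_api_key` and `make_model`, and the model name `gemini-pro` are illustrative choices, so substitute whatever your account and conventions call for.

```python
import os


def load_api_key(env=os.environ, var_name="GOOGLE_API_KEY"):
    """Return the API key from the environment, failing loudly if it is missing."""
    # GOOGLE_API_KEY is an assumed variable name; use whatever your deployment defines.
    key = env.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable before running.")
    return key


def make_model(model_name="gemini-pro"):
    """Configure the SDK and return a model handle.

    The import happens here so load_api_key() can be used without the SDK installed.
    The default model name is an assumption; pick one your key has access to.
    """
    import google.generativeai as genai

    genai.configure(api_key=load_api_key())
    return genai.GenerativeModel(model_name)
```

With the key exported in your shell, a first call might look like `print(make_model().generate_content("Write a haiku about Python.").text)`.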

Crafting Effective Prompts

- Provide clear and concise instructions to guide Gemini's responses.

- Use natural language that reflects your desired outcome.

- Break down complex tasks into smaller prompts for better results.
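One way to apply these prompt guidelines in code is to assemble prompts from labeled parts and to chain small steps together. The sketch below is only an illustration: `build_prompt` and `run_steps` are hypothetical helpers, and the `Task` / `Context` / `Output format` labels are one possible convention, not something Gemini requires.

```python
def build_prompt(task, context="", output_format=""):
    """Assemble a prompt from a clear instruction plus optional context and format."""
    parts = [f"Task: {task.strip()}"]
    if context:
        parts.append(f"Context: {context.strip()}")
    if output_format:
        parts.append(f"Output format: {output_format.strip()}")
    return "\n".join(parts)


def run_steps(generate, steps):
    """Run prompts in sequence, feeding each answer in as context for the next step.

    `generate` is any callable that maps a prompt string to a response string,
    for example: lambda p: model.generate_content(p).text
    """
    previous = ""
    for step in steps:
        previous = generate(build_prompt(step, context=previous))
    return previous
```

For instance, `run_steps(generate, ["List the key points of the article below: ...", "Turn the key points into a three-sentence summary."])` splits one large request into two smaller ones.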

Leveraging Multimodal Inputs (Optional)

- Combine text prompts with images for enhanced understanding.

- Gemini can process various image formats (JPEG, PNG, etc.).

- Experiment with different image-text combinations to see their influence.
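A text-plus-image request could be sketched as below. The helper names `image_part` and `ask_about_image` are hypothetical, and the code assumes `model` is a vision-capable `GenerativeModel` (for example one created with the name `gemini-pro-vision`, which is an assumption about what your key can access). The image is passed as a dictionary holding its MIME type and raw bytes.

```python
import mimetypes
from pathlib import Path


def image_part(path):
    """Read an image file into a {'mime_type': ..., 'data': ...} dictionary."""
    path = Path(path)
    mime_type, _ = mimetypes.guess_type(path.name)
    if mime_type is None or not mime_type.startswith("image/"):
        raise ValueError(f"{path.name} does not look like an image file")
    return {"mime_type": mime_type, "data": path.read_bytes()}


def ask_about_image(model, prompt, path):
    """Send a text prompt and an image together and return the response text."""
    response = model.generate_content([prompt, image_part(path)])
    return response.text
```

Calling `ask_about_image(model, "Describe this chart in two sentences.", "chart.png")` with different prompts for the same image is a quick way to see how the text steers the interpretation.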

Exploring Response Streaming (Optional)

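Stream the response to receive partial output as it is generated instead of waiting for the full result. This is especially useful for long responses and creative text generation, where you can display text immediately and refine the prompt as soon as the output drifts off course.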
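In the `google-generativeai` package, streaming is requested by passing `stream=True` to `generate_content`, which then returns an iterable of chunks. The wrapper below is a minimal sketch: `stream_text` is a hypothetical helper name, `model` is assumed to be an already configured `GenerativeModel`, and error handling is left out for brevity.

```python
def stream_text(model, prompt):
    """Yield pieces of the response as they arrive instead of waiting for the end."""
    for chunk in model.generate_content(prompt, stream=True):
        # Each chunk carries the next fragment of generated text; skip empty ones.
        if chunk.text:
            yield chunk.text
```

A typical use is to print fragments as they arrive:

```python
for piece in stream_text(model, "Write a short story about a lighthouse."):
    print(piece, end="", flush=True)
```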

Handling Errors and Unexpected Results

- Implement error handling to manage API rate limits or unexpected model responses.

- Consider logging errors and adding retry mechanisms for better application stability.
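A retry-with-logging wrapper might look like the sketch below. It is a generic exponential-backoff helper, not part of the Gemini SDK: the name `call_with_retries`, the attempt count, and the delays are all illustrative assumptions to tune for your own quota.

```python
import logging
import random
import time

logger = logging.getLogger(__name__)


def call_with_retries(fn, retry_on=(Exception,), max_attempts=4,
                      base_delay=1.0, sleep=time.sleep):
    """Call fn(), retrying with exponential backoff on the given exception types."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except retry_on as exc:
            if attempt == max_attempts:
                logger.error("Giving up after %d attempts: %s", attempt, exc)
                raise
            # Wait 1x, 2x, 4x ... the base delay, plus a little jitter so that
            # several clients do not retry at exactly the same moment.
            delay = base_delay * 2 ** (attempt - 1) + random.uniform(0, 0.1)
            logger.warning("Attempt %d failed (%s); retrying in %.1fs",
                           attempt, exc, delay)
            sleep(delay)
```

In practice you would narrow `retry_on` to the errors worth retrying. Rate-limit errors are assumed here to surface as `google.api_core.exceptions.ResourceExhausted`, so a call could look like `call_with_retries(lambda: model.generate_content(prompt), retry_on=(ResourceExhausted,))`; check which exception types your SDK version actually raises.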

Continuous Experimentation and Refinement

Iterate on prompts, configurations, and use cases to optimize Gemini's performance for your specific needs. Experimentation leads to a better understanding of Gemini's strengths and how to tailor it to your applications.
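A simple way to make this experimentation systematic is to run every prompt variant at several temperature settings and compare the outputs side by side. The sketch below assumes `model` is a configured `GenerativeModel`; `run_experiments` is a hypothetical helper, and temperature is just one of several generation settings you could vary.

```python
from itertools import product


def run_experiments(model, prompts, temperatures):
    """Try every prompt/temperature pair and collect the outputs for comparison."""
    results = []
    for prompt, temperature in product(prompts, temperatures):
        response = model.generate_content(
            prompt,
            generation_config={"temperature": temperature},
        )
        results.append(
            {"prompt": prompt, "temperature": temperature, "text": response.text}
        )
    return results
```

For example, `run_experiments(model, ["Summarize: ...", "Summarize in one sentence: ..."], [0.2, 0.9])` produces four results that you can review manually or score with your own criteria.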